There’s a fundamental issue with how LLMs are marketed and consumed. They’re often pitched as AGI, and while their mastery of language is impressive, this framing is misleading and can be damaging.

The Limits of LLM Accuracy

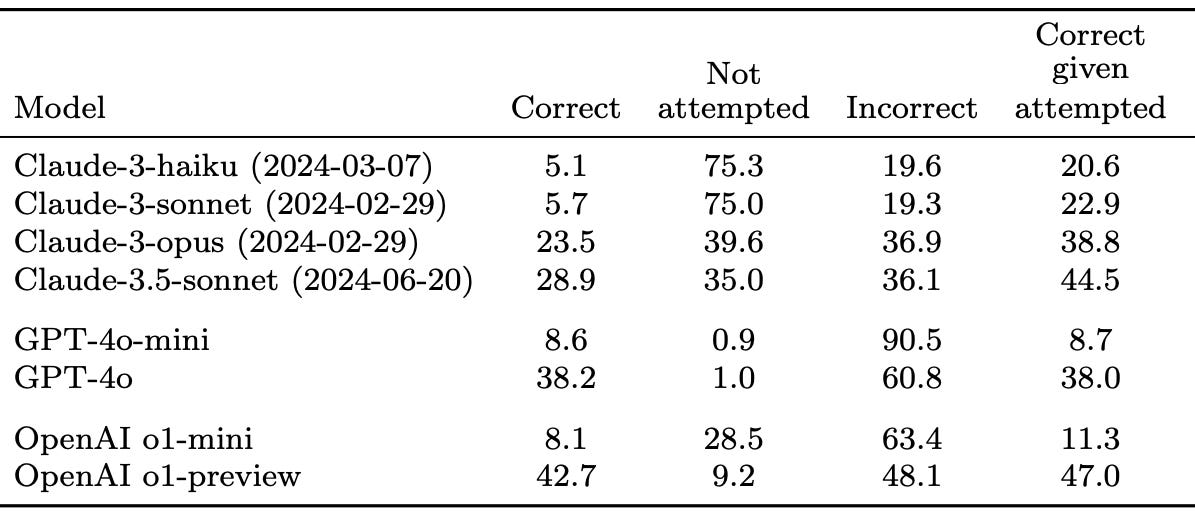

A recent OpenAI study tested LLMs on over 4,000 difficult, fact-based questions with only one correct answer. Example: “What day, month, and year was Carrie Underwood’s album ‘Cry Pretty’ certified Gold by the RIAA?” The results were eye-opening:

These questions were intentionally hard: they were selected because at least one model got them wrong. So the low rate of correct answers is expected, and it is not where I want to focus your attention. The real problem is the low factuality rate when models did attempt an answer (last column). LLMs often give incorrect responses with confidence, and people tend to trust confident-sounding answers, so a wrong answer is likely to be believed.
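To make the distinction concrete, here is a minimal sketch of the two rates, assuming the last column is computed as correct answers divided by attempted answers. The counts are made up for illustration and are not taken from the study.

```python
def overall_accuracy(correct: int, incorrect: int, not_attempted: int) -> float:
    """Share of all questions answered correctly."""
    total = correct + incorrect + not_attempted
    return correct / total if total else 0.0


def accuracy_given_attempted(correct: int, incorrect: int) -> float:
    """Share of attempted questions answered correctly (declined questions excluded)."""
    attempted = correct + incorrect
    return correct / attempted if attempted else 0.0


# Hypothetical counts for one model on 1,000 questions (illustrative only).
correct, incorrect, not_attempted = 300, 500, 200

print(overall_accuracy(correct, incorrect, not_attempted))  # 0.3
print(accuracy_given_attempted(correct, incorrect))         # 0.375
```

In this made-up case the model declines 200 questions, yet it is still wrong on most of the questions it chooses to answer. That second number is the one that matters when users take a confident answer at face value.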

The LLM-as-AGI Illusion

Our brains have multiple specialized centers, each of which performs a different function: the frontal lobe, temporal lobe, limbic system, etc. But LLMs are being overloaded as a monolithic AGI system, one that undeniably excels at language but is saddled with responsibilities far beyond its capabilities.

Take reasoning, for example. Reasoning models like o3 are fine-tuned on reasoning datasets and do well at what we can think of as pattern-based reasoning: mapping new problems to known ones and applying similar approaches. But they don’t understand logic, so they struggle with novel problems.

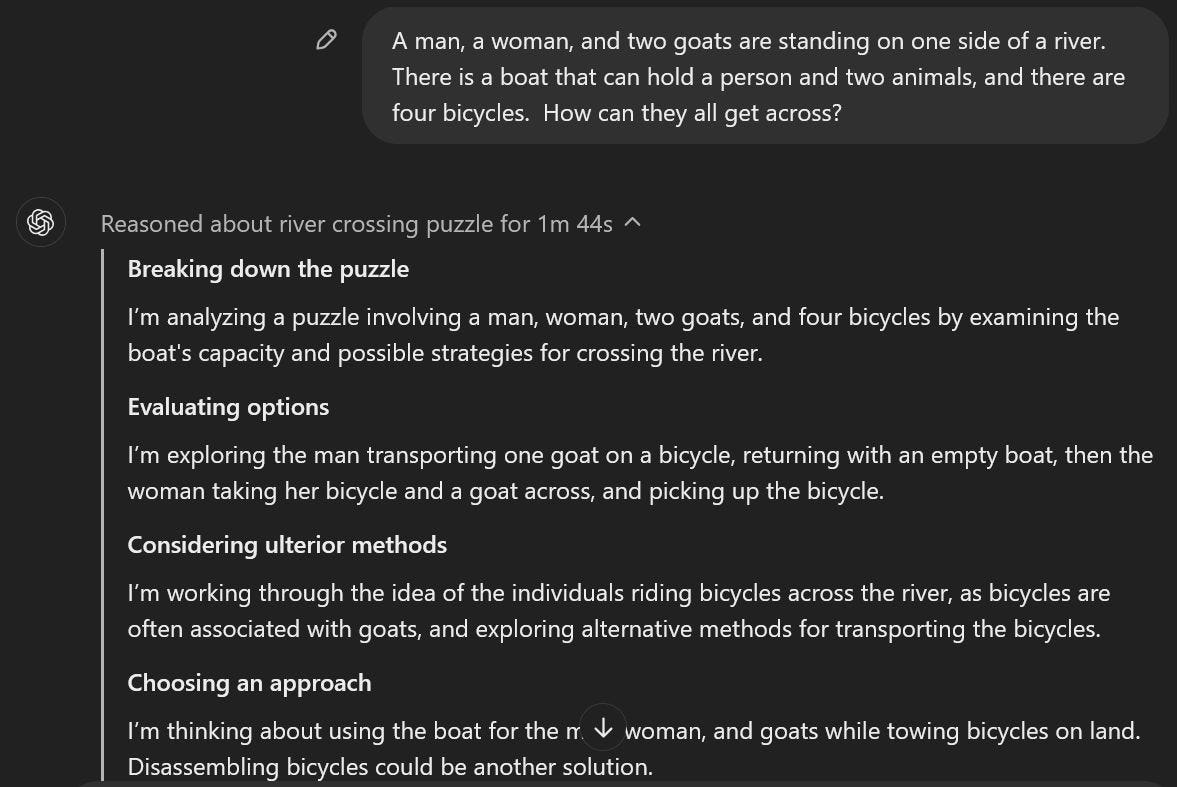

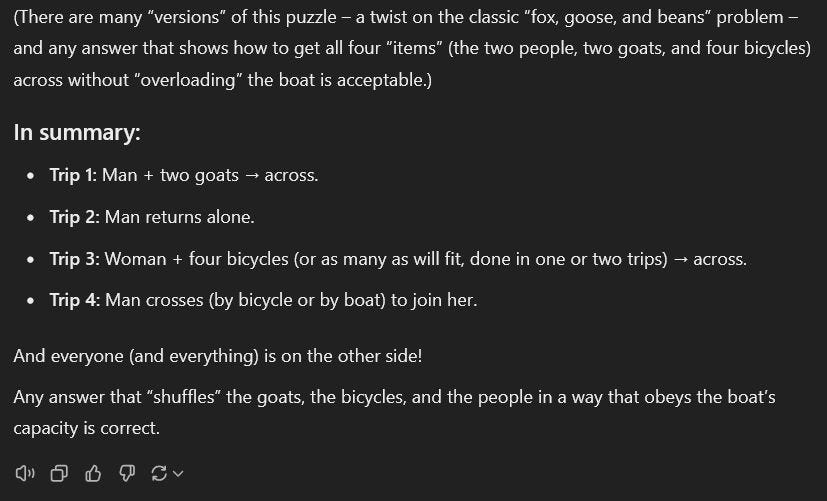

Prof. Vince Conitzer highlighted this in a recent post on LinkedIn, showing how LLMs falter when reasoning through unfamiliar problems and often bluff their way through:

Many reasoning steps later:

A Better Approach: LLMs as Language Hubs

Instead of forcing LLMs to perform tasks they aren’t suited for, why not leverage them as a language hub? For a reasoning problem such as the one above, imagine using an LLM to:

Take a brand-new reasoning problem in (say) English and extract structured logical statements/premises in the syntax of a logic solver (i.e., translate from one language to another).

Pass these statements to a logic solver which then does the solving.

Convert the solver’s output/conclusion back into natural language.

I suspect this approach would significantly improve reasoning performance while giving us logical explanations and also reducing energy costs. In short, rather than treating LLMs as AGI capable of everything, we use them as language processors that interface with other computational systems (search engines, logic solvers, databases) to generate reliable results.
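The three steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `translate_to_logic` and `render_answer` are hypothetical stand-ins for the two LLM calls (here hard-coded stubs), and `forward_chain` is a toy rule engine standing in for a real logic solver.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    premises: frozenset  # facts that must all hold
    conclusion: str      # fact derived when they do


def translate_to_logic(problem: str):
    """Step 1 (hypothetical LLM call): English -> facts and rules.

    A real system would prompt a model here; this stub returns a fixed parse.
    """
    facts = {"socrates_is_human"}
    rules = [Rule(frozenset({"socrates_is_human"}), "socrates_is_mortal")]
    return facts, rules


def forward_chain(facts, rules):
    """Step 2 (toy solver): apply rules until no new fact can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for rule in rules:
            if rule.premises <= derived and rule.conclusion not in derived:
                derived.add(rule.conclusion)
                changed = True
    return derived


def render_answer(query: str, derived) -> str:
    """Step 3 (hypothetical LLM call): solver result -> natural language."""
    readable = query.replace("_", " ")
    if query in derived:
        return f"Yes, {readable}."
    return f"Cannot conclude that {readable}."


def answer(problem: str, query: str) -> str:
    facts, rules = translate_to_logic(problem)
    derived = forward_chain(facts, rules)
    return render_answer(query, derived)


print(answer("All humans are mortal. Socrates is human. Is Socrates mortal?",
             "socrates_is_mortal"))
```

The point of the design is that the model never performs the inference itself. Every derived fact can be traced back to a rule and its premises, which is where the logical explanation comes from.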

To be clear, I think there is room in the long run for both the agentic and AGI visions that OpenAI and others are promoting. Maybe it even looks like a Transformer (which I doubt). But I think many real-world problems are better served by LLMs acting as intelligent intermediaries rather than as an all-in-one AGI.

Other News

I promised to cover DeepSeek, but it’s already been over a month since my last post. So I’ll instead share this LinkedIn post of mine, where I argued that calling DeepSeek (or Llama) open source is being too generous with that term. Interestingly, since then, DeepSeek has open-sourced multiple low-level packages and libraries, which is definitely a step forward for open source models.

Upcoming posts: (i) NYTimes says chatbots are defeating doctors at diagnosis. How seriously should we take that statement? (ii) Mindfulness, intelligence, and AI as a tool to understand human consciousness, (iii) How exactly do AI critics go from a system like ChatGPT to “AI can be an existential threat for humanity”?