#9: Gen AI models vs the human brain

How much room for improvement is there and where will those improvements come from?

In today’s post, let’s benchmark where we are with GenAI models and how much room is left for further improvement. We can frame this question as a comparison of the complexity of artificial neural networks (i.e. AI) vis-a-vis biological neural networks (animal brains), the size of their training datasets, and the cost of training current GPTs. A few graphs from a talk by Professor Mirella Lapata provide a striking visualization of the trends.

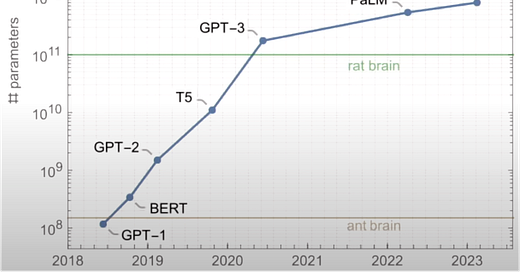

First, let’s look at a graph comparing the number of parameters in today’s AI models to those in biological neural networks (the latter are approximated by researchers; we didn’t build these neural networks, so we don’t know precisely). GPT-1, from just a few years ago, was below an ant brain, but GPT-4 already surpasses a rat brain in number of parameters (don’t tell the rats that GPT-2, with fewer parameters than they have, was already handling language). Notice that the Y-axis is on a log scale, so the improvement from GPT-1 to GPT-4 (i.e. the gap between an ant brain and a rat brain) is a phenomenal climb in just a few years. This illustrates the growing sophistication of AI models, but it also shows that we remain far from the complexity of a human brain, which has about 100X more parameters than GPT-4.
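To make the log-scale reading concrete, here is a minimal sketch in Python. It only illustrates that equal vertical distances on a log10 axis correspond to equal multiplicative factors. The function name `orders_of_magnitude` is a hypothetical helper introduced for illustration, and the only figure taken from the post is the 100X ratio between the human brain and GPT-4.

```python
import math


def orders_of_magnitude(ratio: float) -> float:
    """Return the vertical distance on a log10 axis for a multiplicative gap.

    `ratio` is how many times larger one quantity is than another.
    (Hypothetical helper, introduced only for illustration.)
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return math.log10(ratio)


# The post's figure: the human brain has about 100X the parameters of GPT-4.
gap = orders_of_magnitude(100)
print(f"A 100X gap spans {gap:.0f} orders of magnitude on a log axis")
```

On a linear axis the same 100X gap would dwarf everything below it, which is why the graph needs a log scale to show ant, rat, and human brains together.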

The second graph plots the number of words processed by AI models during training against benchmarks such as the totality of human-written text and the volume of text in English Wikipedia. Models like GPT-4 have been trained on datasets far exceeding the content of English Wikipedia, suggesting their potential for deep understanding and generation of human language. Strikingly, we are approaching the limits of all human-written text. Unlike LLMs, human brains are also trained on the vast amounts of visual and audio data we take in during daily life, and text is only a small fraction of all the information we consume. The fact that we are running out of text data is also why most companies are focused on multi-modal AI these days.

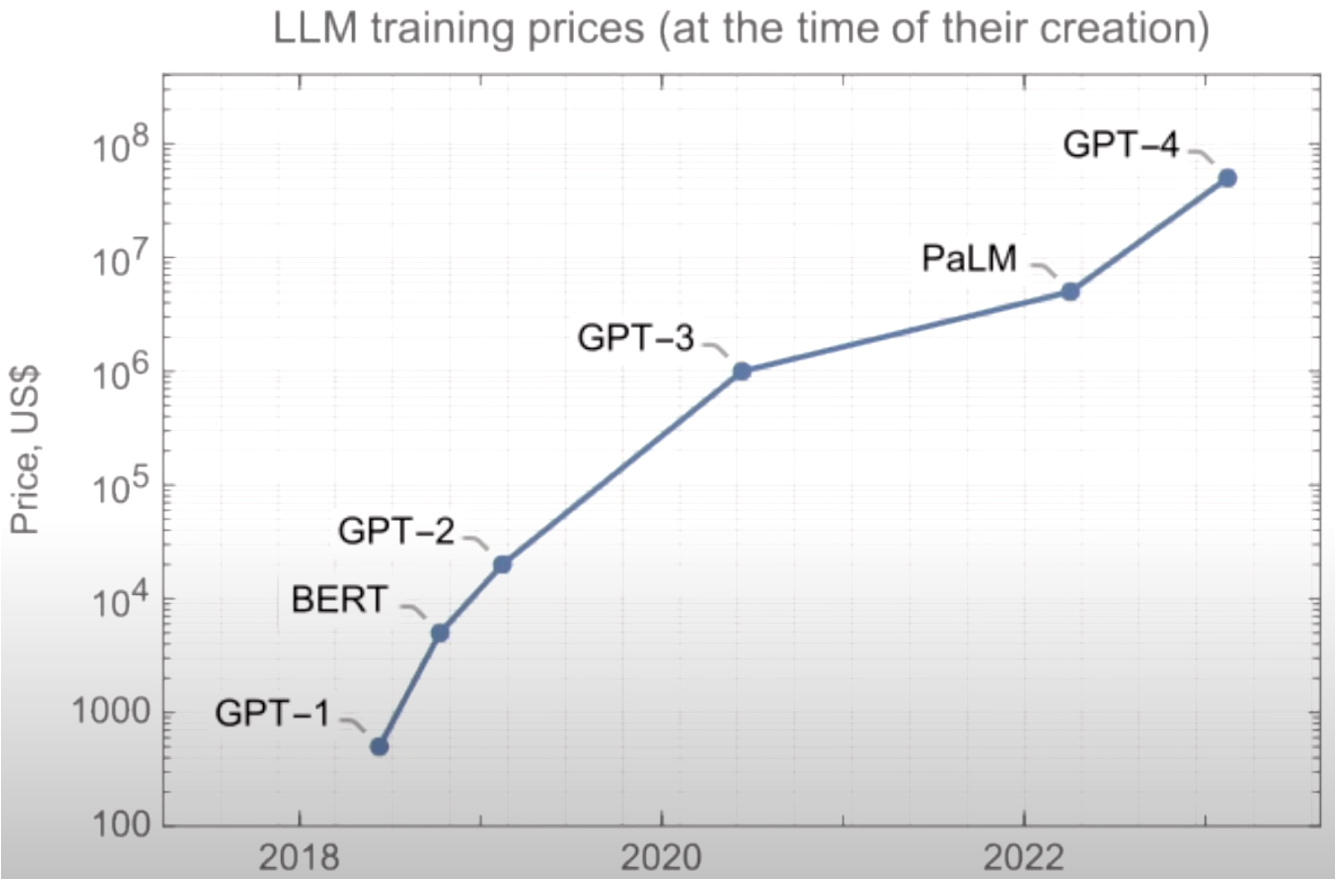

Finally, we turn to the soaring costs of developing these Large Language Models (LLMs). Training on terabyte-scale datasets requires significant investment, with GPT-4's training costs estimated at around $100 million. Such figures favor the tech giants, potentially marginalizing smaller firms and concentrating power within a few dominant players, which could restrict innovation and diversity in AI advancements.

Beyond the financial implications, these models carry a hefty environmental footprint. The energy consumption for training and running LLMs is projected to reach astronomical levels, with NVIDIA's AI units expected to consume more energy than many small countries by 2027. This is not just an economic but also an environmental call to action: we need new architectures that can be trained more efficiently than the current one, whose training cost grows with the square of the training data (i.e. a 10X increase in data produces a 100X increase in training costs).
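The quadratic relationship described above can be sketched in a few lines of Python. This is a toy model under the post's stated assumption that cost grows with the square of the training data. The function name `relative_training_cost` and the `exponent` parameter are hypothetical, introduced only to show how a better-scaling architecture (a lower exponent) would change the picture.

```python
def relative_training_cost(data_multiplier: float, exponent: float = 2.0) -> float:
    """Return how many times training cost grows when data grows by `data_multiplier`.

    Assumes cost is proportional to data ** exponent. The default exponent
    of 2.0 is the quadratic scaling stated in the post, not a measured value.
    """
    if data_multiplier <= 0:
        raise ValueError("data_multiplier must be positive")
    return data_multiplier ** exponent


# Quadratic scaling (the post's assumption) vs. a hypothetical linear architecture.
for multiplier in (2, 10, 100):
    quadratic = relative_training_cost(multiplier)
    linear = relative_training_cost(multiplier, exponent=1.0)
    print(f"{multiplier}X data -> {quadratic:,.0f}X cost (quadratic), "
          f"{linear:,.0f}X cost (linear)")
```

Under these assumptions, the gap between the two columns widens as the dataset grows, which is the argument for architectures that scale better with data.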

In short, progress is going to come from three places: more efficient model architectures that scale better with dataset size, multi-modal data as we run out of text data, and more parameters in the models. All three are happening as we speak, and we should expect big announcements on all three fronts within 12 months.

Good write-up, Kartik, simple enough to make sense to me! Is there any estimate or guess for how long before the gap to the human brain is closed? Years? Decades? Or more? And one more major source of environmental impact to worry about

Great Article sir.